A cloud-native order management platform can boost speed, scale, and operational efficiency

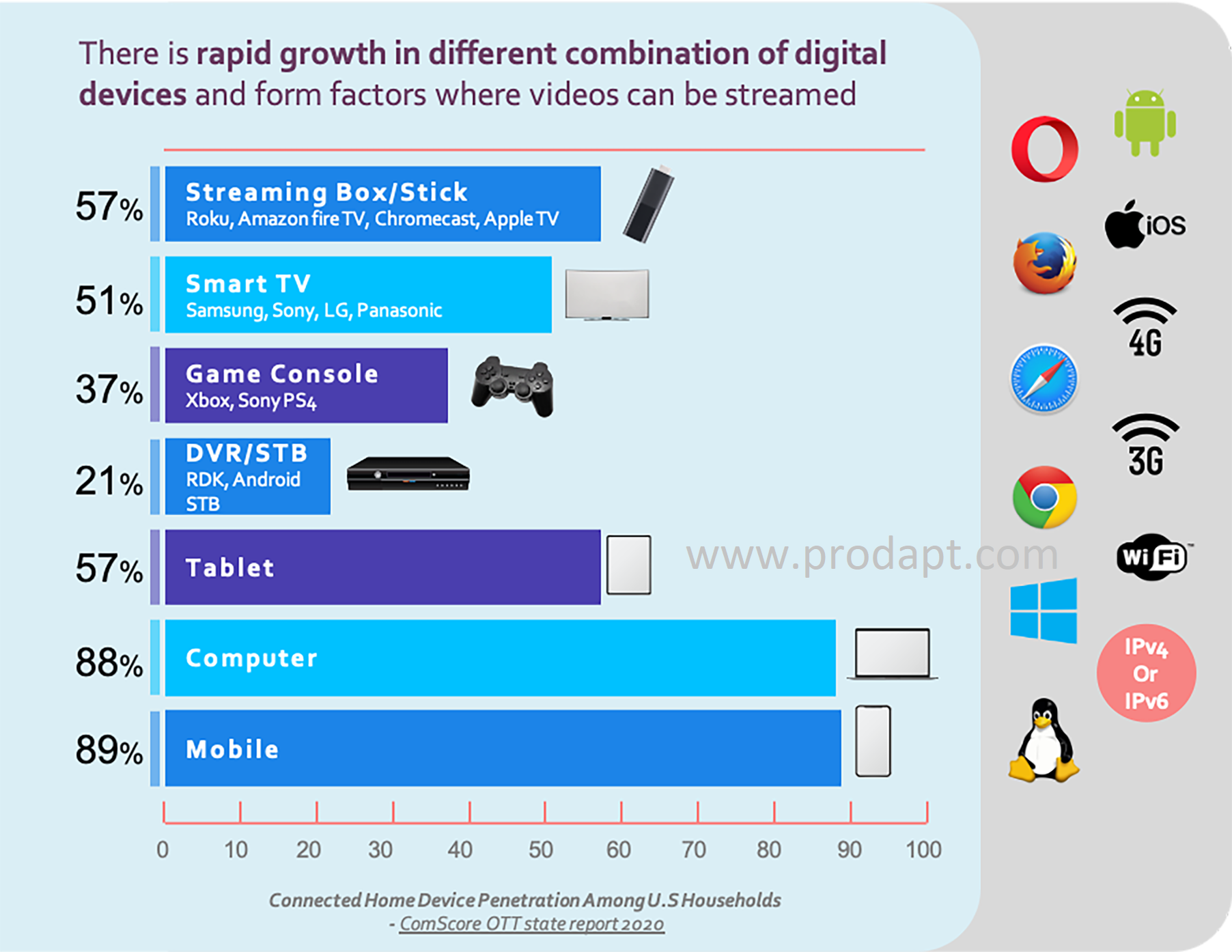

Fulfilling customer orders on time and accurately has always been critical to business success. But this has become much harder as customer expectations rise in the digital era. Today’s consumers seek instant gratification. They want new digital services activated instantly on the device of their choice, through any channel, whether by phone or online, and with as little friction as possible.

So, what stops businesses from exceeding customer expectations when fulfilling orders? Why do so many orders fall out, and why do businesses miss the promised activation dates? Why can't they quickly customize and deliver new product offerings that meet their customers' varied needs? And when they do manage it, why is it so costly and time-consuming?

The core problem lies in the legacy order management application, which has grown like a huge elephant over time, making the entire ecosystem complex and rigid when it comes to processing new orders. It stifles innovation and drives up costs. To overcome this, leading businesses have begun transforming their legacy order management.

A cloud-native digital platform for order management boosts service providers’ speed, scale, and operational efficiency, enabling them to thrive in the digital era.

A cloud-native digital platform is an ideal approach to this transformation, but it is easier said than done. Businesses need to rebuild the application from the ground up, which means rewriting the entire order management stack from scratch, including key applications such as order capture, order execution, product catalog, asset management, user documentation, and notifications.