Enable 5X transparency in AIOps, achieving a more reliable and accurate business outcome

Service providers in the connectedness vertical are embracing Artificial Intelligence for IT Operations (AIOps) to transform their businesses, but users hesitate to entrust their operations to a complex platform that offers no visibility into how it works. Because of this lack of transparency, service providers worry about making bad decisions based on AI recommendations and about the liability for those decisions and actions.

In their quest for autonomous operations, service providers seek to be more proactive with predictive analytics, where machines make most of the decisions and help engineers take preemptive action. However, engineers need complete visibility into the underlying logic the AIOps platform uses, and the ability to validate whether its outcome is reliable.

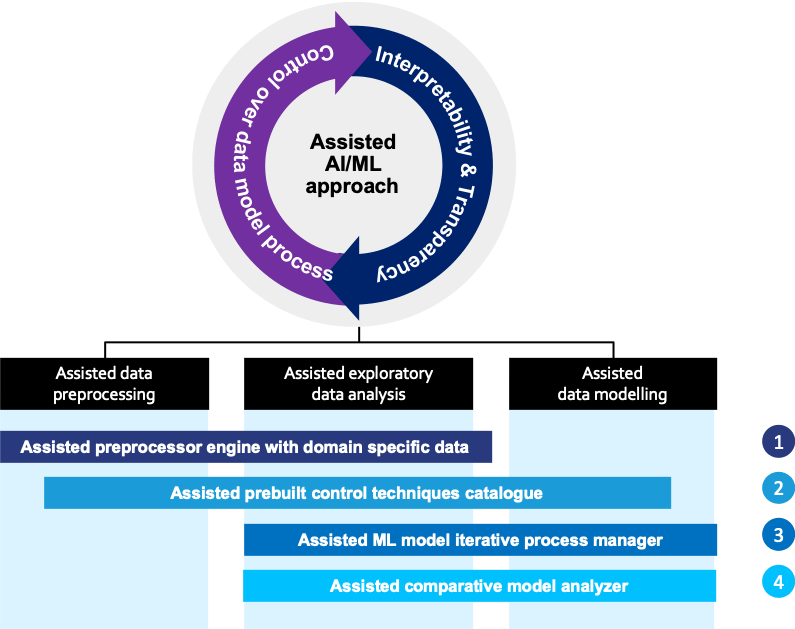

Figure 1: Assisted Artificial Intelligence and Machine Learning Framework

To accelerate AI/ML model development with enhanced transparency, enterprises must switch from existing automated machine learning (AutoML) to solutions based on an assisted AI/ML framework.

Explainable machine learning (ML) models aim to solve the transparency problem by exposing the logic behind an AIOps solution so that users can understand its outcome. The model explains how the AI solution was applied and what it produced, in terms that users can understand, rely on, and trust. Explanation in the ML model can thus be viewed as a means of transforming a black-box AIOps platform into a glass-box one, lifting the veil on its computation and logic.
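To make the glass-box idea concrete, here is a minimal sketch in Python. It assumes the simplest possible explainable model, a linear score, where each feature's contribution is just its weight times its value. The feature names (`cpu_utilization`, `packet_loss`, `open_alarms`), the weights, and the helper functions are hypothetical and chosen only for illustration; they do not describe any particular AIOps product or the framework in Figure 1.

```python
from dataclasses import dataclass


@dataclass
class Explanation:
    score: float
    contributions: dict[str, float]  # feature name -> signed contribution to the score


# Hypothetical weights for an illustrative incident-risk score (assumption, not a real model).
WEIGHTS = {"cpu_utilization": 0.5, "packet_loss": 2.0, "open_alarms": 0.25}
BIAS = -1.0


def explain_risk(features: dict[str, float]) -> Explanation:
    """Score a linear model and report how much each feature added to the score."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    return Explanation(score=score, contributions=contributions)


def top_drivers(explanation: Explanation, k: int = 1) -> list[str]:
    """Return the k features with the largest absolute contribution."""
    ranked = sorted(
        explanation.contributions,
        key=lambda name: abs(explanation.contributions[name]),
        reverse=True,
    )
    return ranked[:k]
```

Given a reading such as `{"cpu_utilization": 2.0, "packet_loss": 1.5, "open_alarms": 4.0}`, an engineer sees not only the score but also that `packet_loss` contributed the most to it, which is the kind of visibility that lets them validate a recommendation before acting on it. Real explainability tooling for non-linear models is considerably more involved; this sketch only shows the shape of the output.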