Leverage RPA and AI to build and implement a proactive, two-way conversational framework that reduces OpEx, boosts agent productivity and improves NPS

According to recent statistics, 30% of calls to service providers' contact centers are related to network outages. When providers cannot predict these outages in time and inform customers in advance, contact centers face call spikes, customers become dissatisfied and NPS drops. This also increases contact center OpEx and can damage the service provider's reputation.

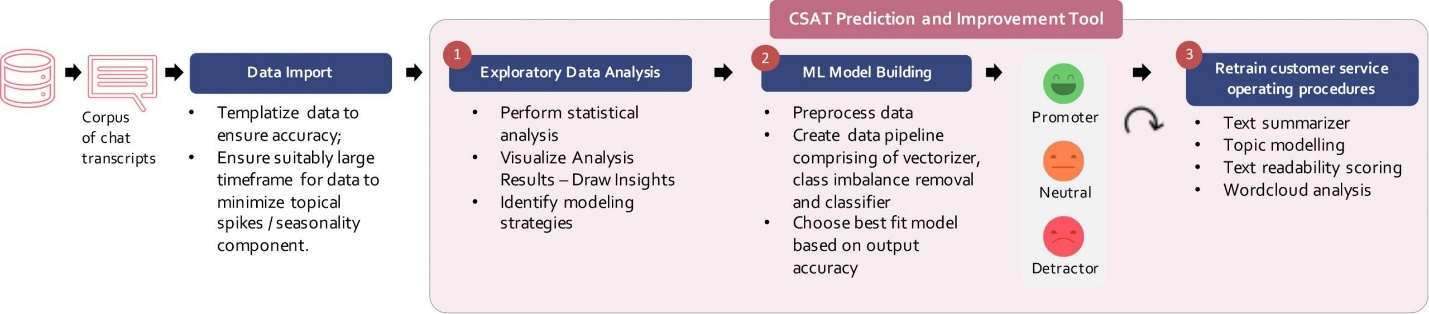

To overcome these challenges and improve NPS, service providers need a central intelligent platform that can orchestrate seamless conversations between contact centers and customers. This is achieved by implementing a two-way conversational framework, in the following steps:

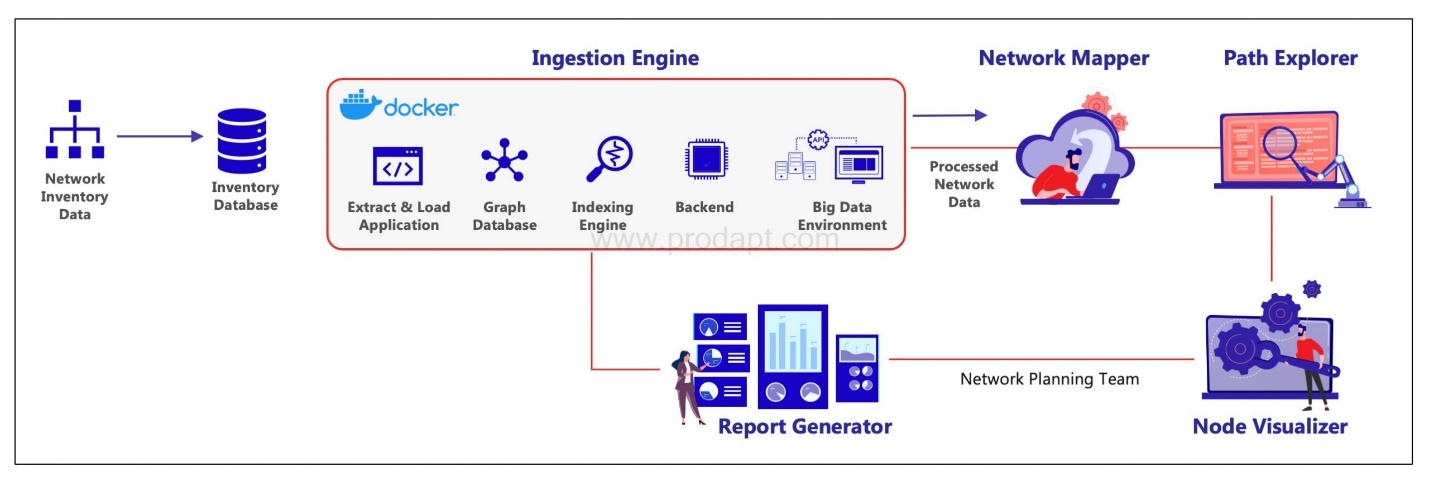

- Step 1: Auto-identification of outage information

Build a standardized process to identify relevant outages in the network monitoring systems. Integrate these systems with an outage monitoring dashboard so that the bot can automatically extract outages and store them in a central database.

- Step 2: Schedule notifications

Automatically validate the extracted outages and intelligently schedule proactive notifications to the impacted customers in a consistent, well-organized format.

- Step 3: Notify and engage customers using a conversational AI bot

Send proactive notifications to the impacted customers. If a customer has follow-up questions, the bot uses conversational AI to engage them in a conversation.
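Steps 1 and 2 above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not the framework's actual implementation: the `Outage` record is hypothetical, an in-memory SQLite database stands in for the central database, "validation" is reduced to keeping only active outages, and "intelligent scheduling" is modeled as a hypothetical quiet-hours rule (22:00 to 08:00) plus one notification per customer.

```python
import sqlite3
from dataclasses import dataclass
from datetime import datetime, time, timedelta


@dataclass
class Outage:
    """Hypothetical outage record, as a bot might extract it from a dashboard."""
    outage_id: str
    area: str      # e.g. a postcode or cell-site cluster (assumption)
    start: datetime
    status: str    # "active" or "resolved" (assumption)


# Hypothetical scheduling rule: no notifications between 22:00 and 08:00.
QUIET_START = time(22, 0)
QUIET_END = time(8, 0)


def store_outages(db: sqlite3.Connection, outages: list[Outage]) -> None:
    """Step 1: persist extracted outages in the central database.

    INSERT OR REPLACE keeps the step idempotent: re-extracting an outage
    updates its row (e.g. when its status changes) instead of duplicating it.
    """
    db.execute(
        "CREATE TABLE IF NOT EXISTS outages "
        "(id TEXT PRIMARY KEY, area TEXT, start TEXT, status TEXT)"
    )
    db.executemany(
        "INSERT OR REPLACE INTO outages VALUES (?, ?, ?, ?)",
        [(o.outage_id, o.area, o.start.isoformat(), o.status) for o in outages],
    )
    db.commit()


def next_send_time(now: datetime) -> datetime:
    """Defer a notification that falls in quiet hours to the next QUIET_END."""
    t = now.time()
    if t >= QUIET_START:
        return datetime.combine(now.date() + timedelta(days=1), QUIET_END)
    if t < QUIET_END:
        return datetime.combine(now.date(), QUIET_END)
    return now


def schedule_notifications(
    db: sqlite3.Connection,
    customers_by_area: dict[str, list[str]],
    now: datetime,
) -> dict[str, tuple[str, datetime]]:
    """Step 2: validate outages and schedule one notification per customer.

    Validation here only means "the outage is still active"; resolved
    outages are skipped. A customer affected by several outages is
    notified once, about the earliest one.
    """
    rows = db.execute(
        "SELECT id, area FROM outages WHERE status = 'active' ORDER BY start, id"
    ).fetchall()
    send_at = next_send_time(now)
    schedule: dict[str, tuple[str, datetime]] = {}
    for outage_id, area in rows:
        for customer in customers_by_area.get(area, []):
            schedule.setdefault(customer, (outage_id, send_at))
    return schedule
```

Under this rule, an outage detected at 23:15 and processed at 23:20 would be queued for 08:00 the next morning, and a customer in two overlapping outage areas would receive a single message rather than two.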

The conversational AI bot orchestrates two-way communication and provides a seamless customer experience during common network outages.
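The two-way exchange described above can be sketched as a small conversation object: the bot sends the proactive notification (outbound) and then answers follow-up messages (inbound). This is a minimal illustration, not a real conversational AI: keyword matching in the hypothetical `detect_intent` and `INTENT_KEYWORDS` stands in for an NLU model, and the names `OutageContext` and `OutageConversation`, the message wording and the rule of escalating any unrecognized query to a human agent are all assumptions.

```python
import re
from dataclasses import dataclass, field


@dataclass
class OutageContext:
    outage_id: str
    area: str
    eta: str  # estimated restoration time, as display text (assumption)


# Hypothetical keyword lists standing in for a conversational AI / NLU model.
INTENT_KEYWORDS = {
    "eta": {"when", "eta", "long", "restore", "restored", "back", "fixed"},
    "cause": {"why", "cause", "reason"},
    "agent": {"agent", "human", "person"},
}


def detect_intent(message: str) -> str:
    """Return the first intent whose keywords appear as whole words."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return "unknown"


@dataclass
class OutageConversation:
    ctx: OutageContext
    transcript: list[tuple[str, str]] = field(default_factory=list)
    escalated: bool = False

    def notify(self) -> str:
        """Proactive, bot-initiated message (the outbound direction)."""
        msg = (
            f"We are aware of a service outage in {self.ctx.area} "
            f"(ref {self.ctx.outage_id}). Estimated restoration: {self.ctx.eta}. "
            "Reply to this message if you have any questions."
        )
        self.transcript.append(("bot", msg))
        return msg

    def reply(self, message: str) -> str:
        """Handle a customer follow-up (the inbound direction)."""
        self.transcript.append(("customer", message))
        intent = detect_intent(message)
        if intent == "eta":
            answer = f"Service is expected to be restored by {self.ctx.eta}."
        elif intent == "cause":
            answer = "Our engineers are investigating a network fault in your area."
        else:
            # Explicit agent requests and unrecognized queries go to a human.
            self.escalated = True
            answer = "Let me connect you with an agent who can help further."
        self.transcript.append(("bot", answer))
        return answer
```

Letting the bot answer routine restoration-time and cause questions while handing everything else to a human is one way such a framework could keep simple outage queries out of the contact center queue.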