Realizing the trustworthiness of AI systems

Implement an AI reliability scorecard to accelerate trusted decision-making

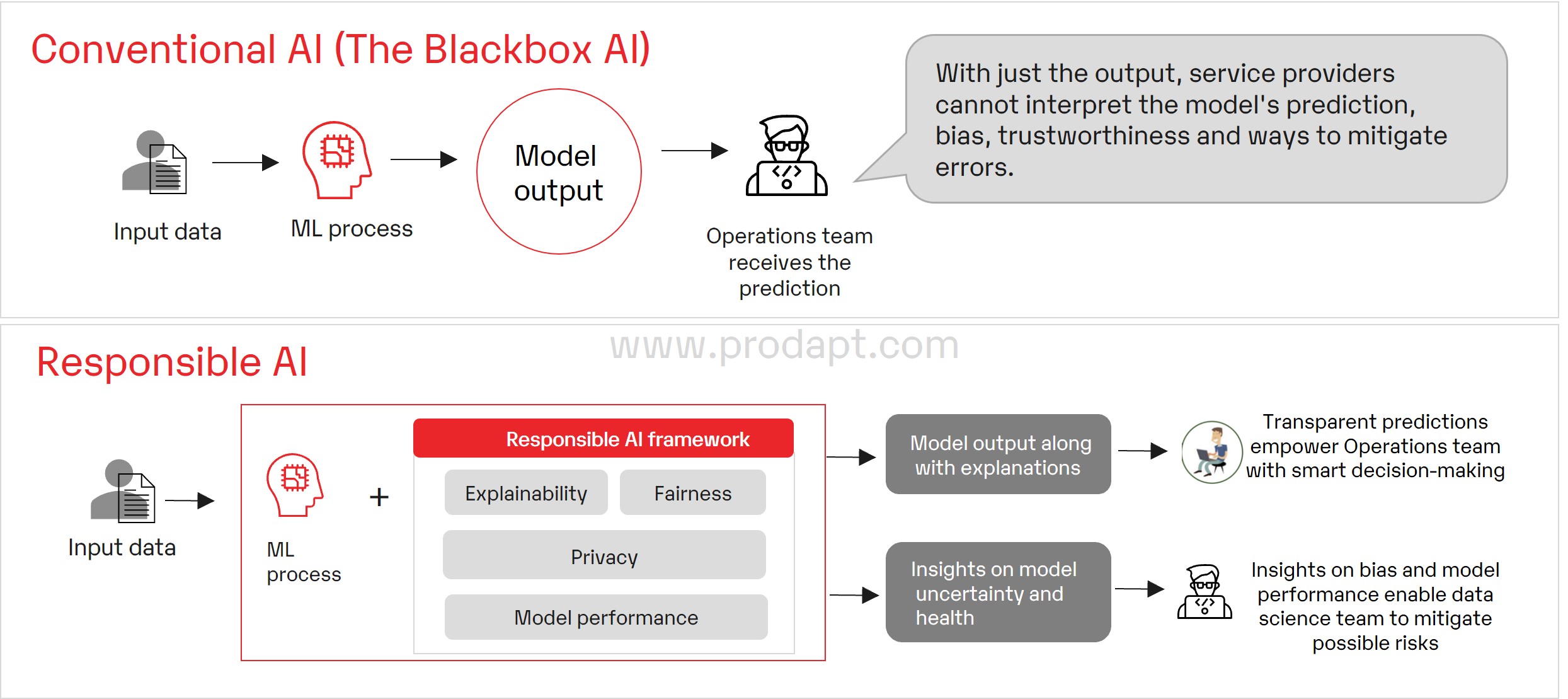

Service providers in the Connectedness industry increasingly rely on digital technologies such as Artificial Intelligence (AI) to remain competitive. However, AI demands a high level of trust because of open questions about its fairness, explainability, and security. Ensuring that trust is a priority, since a lack of trust can be the biggest obstacle to the widespread adoption of AI.

Today's AI implementations lack mechanisms to produce fair and interpretable predictions, to explain how complex machine learning (ML) models work, and to secure models against adversarial attacks that can leak Personally Identifiable Information (PII). Moreover, most organizations struggle to make their AI trustworthy and responsible. Examples include reducing bias, tracking performance variations and model drift, and explaining AI-powered decisions.

As service providers struggle to address the risks arising from bias and privacy issues, implementing Responsible AI helps them recognize, prepare for, and mitigate the potential effects of AI. It also improves transparency in communication, end-user trust, model auditability, and the productive use of AI.

Figure: Responsible AI, the key to achieving a trustworthy AI system